Several events aligned recently that rekindled an old flame:

- Microsoft HoloLens was announced

- I’ve recently gotten into 3D printing

- I attended a hackathon as part of my day job at Microsoft, where I met the very talented David Catuhe of Babylon.js fame

This motivated me to get back into the swing of 3D, so I decided to build a small app that loads various 3D printing file formats (starting with STL) into Babylon.js, courtesy of my HybridWebApp Framework (no HoloLens…yet).

Step 1

Learn the .babylon file format

This part was easy, as the format is well documented and the source code for the loader is easily readable.

I learned that a .babylon file with a single mesh should look similar to this once exported:

    {
        "cameras": null,
        "meshes": [
            {
                "name": "myModel",
                "position": [ 0.0, 0.0, 0.0 ],
                "rotation": [ 0.0, 0.0, 0.0 ],
                "scaling": [ 1.0, 1.0, 1.0 ],
                "infiniteDistance": false,
                "isVisible": true,
                "isEnabled": true,
                "pickable": false,
                "applyFog": false,
                "alphaIndex": 0,
                "billboardMode": 0,
                "receiveShadows": false,
                "checkCollisions": false,
                "positions": [],
                "normals": [],
                "uvs": null,
                "indices": []
            }
        ]
    }
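To make the relationship between those fields a little more concrete, here is a minimal sketch in JavaScript that fills the same structure with a single triangle. The `createBabylonFile` helper and the `triangleFile` and `triangleJson` names are hypothetical and exist only for this illustration; the field names are copied from the sample above.

```javascript
// Hypothetical helper: wraps flat vertex data in the single-mesh .babylon
// structure shown above. Field names mirror the JSON sample.
function createBabylonFile(name, positions, normals, indices) {
    return {
        cameras: null,
        meshes: [{
            name: name,
            position: [0, 0, 0],
            rotation: [0, 0, 0],
            scaling: [1, 1, 1],
            infiniteDistance: false,
            isVisible: true,
            isEnabled: true,
            pickable: false,
            applyFog: false,
            alphaIndex: 0,
            billboardMode: 0,
            receiveShadows: false,
            checkCollisions: false,
            positions: positions, // x, y, z for each vertex, flattened
            normals: normals,     // x, y, z for each vertex, flattened
            uvs: null,
            indices: indices      // three entries per triangle
        }]
    };
}

// One triangle in the XY plane, facing +Z.
const triangleFile = createBabylonFile(
    "triangle",
    [0, 0, 0, 1, 0, 0, 0, 1, 0],
    [0, 0, 1, 0, 0, 1, 0, 0, 1],
    [0, 1, 2]
);
const triangleJson = JSON.stringify(triangleFile);
```

Three vertices produce nine position values, nine normal values and three indices, which is a handy sanity check when debugging a converter.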

So I created some simple C# models to represent the mesh/file structure:

    using System.Collections.Generic;

    // BillboardMode and BabylonCamera are not shown here.
    public class BabylonMesh
    {
        public string Name { get; set; }
        public float[] Position { get; set; }
        public float[] Rotation { get; set; }
        public float[] Scaling { get; set; }
        public bool InfiniteDistance { get; set; }
        public bool IsVisible { get; set; }
        public bool IsEnabled { get; set; }
        public bool Pickable { get; set; }
        public bool ApplyFog { get; set; }
        public int AlphaIndex { get; set; }
        public BillboardMode BillboardMode { get; set; }
        public bool ReceiveShadows { get; set; }
        public bool CheckCollisions { get; set; }
        public float[] Positions { get; set; }
        public float[] Normals { get; set; }
        public float[] Uvs { get; set; }
        public int[] Indices { get; set; }
    }

    public class BabylonFile
    {
        public IEnumerable<BabylonCamera> Cameras { get; set; }
        public IEnumerable<BabylonMesh> Meshes { get; set; }
    }

Step 2

Learn about the STL file format

As it turns out, STL (STereoLithography) is a really old format, published by 3D Systems in the late 1980s, that has both a binary and an ASCII representation. Wikipedia gave a pretty good outline, but I preferred the reference on the fabbers spec page.

I decided to implement both ASCII and binary, as I noticed that Sketchfab and other sites offer both variants for download.
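For readers who have not seen the binary layout, here is a rough sketch in JavaScript (the converter in this project is written in C#, so this is an illustration and not the project's code). It assumes the commonly documented binary structure: an 80-byte header, a little-endian 32-bit facet count, then 50 bytes per facet. The function names `isBinaryStl` and `parseBinaryStl` are made up for this example. Checking the file size against the facet count is used here because some binary files are reported to begin with the word "solid", which makes a text prefix check unreliable.

```javascript
// Binary STL layout: 80-byte header, uint32 facet count, then 50 bytes per facet
// (12 little-endian float32 values plus a 2-byte attribute field).
const STL_HEADER_BYTES = 80;
const STL_FACET_BYTES = 50;

// Returns true when the buffer length matches the binary layout exactly.
function isBinaryStl(arrayBuffer) {
    if (arrayBuffer.byteLength < STL_HEADER_BYTES + 4) {
        return false;
    }
    const view = new DataView(arrayBuffer);
    const facetCount = view.getUint32(STL_HEADER_BYTES, true);
    const expectedLength = STL_HEADER_BYTES + 4 + facetCount * STL_FACET_BYTES;
    return arrayBuffer.byteLength === expectedLength;
}

// Reads every facet as { normal: [x, y, z], vertices: [[x, y, z], ...] }.
function parseBinaryStl(arrayBuffer) {
    const view = new DataView(arrayBuffer);
    const facetCount = view.getUint32(STL_HEADER_BYTES, true);
    const facets = [];
    let offset = STL_HEADER_BYTES + 4;
    for (let i = 0; i < facetCount; i++) {
        const values = [];
        for (let j = 0; j < 12; j++) {
            values.push(view.getFloat32(offset + j * 4, true));
        }
        facets.push({
            normal: values.slice(0, 3),
            vertices: [values.slice(3, 6), values.slice(6, 9), values.slice(9, 12)]
        });
        offset += STL_FACET_BYTES; // also skips the 2-byte attribute field
    }
    return facets;
}
```

A caller would typically try `isBinaryStl` first and fall back to ASCII parsing when it returns false.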

In essence, STL is a simple format that stores facets (triangles), each made up of a normal and three vertices…and that’s it. No materials or colour data, just triangles. This made it fairly simple to convert, although I needed to brush up on my knowledge of vertex and index buffers; the Rendering from Vertex and Index Buffers article on MSDN was a great reference.
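As an illustration of how facets map onto vertex and index buffers, here is a small JavaScript sketch that reads ASCII STL text into flat `positions`, `normals` and `indices` arrays. It is a simplification and not the C# converter from this project: the `stlAsciiToBuffers` name is hypothetical, vertices are not shared between facets, and each vertex simply reuses the normal of the facet it belongs to.

```javascript
// Hypothetical helper: converts ASCII STL text into flat buffers.
// Every facet contributes three new vertices, so indices are sequential.
function stlAsciiToBuffers(text) {
    const positions = [];
    const normals = [];
    const indices = [];
    let currentNormal = [0, 0, 0];

    text.split(/\r?\n/).forEach(function (line) {
        const tokens = line.trim().split(/\s+/);
        if (tokens[0] === "facet" && tokens[1] === "normal") {
            // "facet normal nx ny nz" starts a new triangle.
            currentNormal = tokens.slice(2, 5).map(Number);
        } else if (tokens[0] === "vertex") {
            // "vertex x y z" adds one corner of the current triangle.
            const vertex = tokens.slice(1, 4).map(Number);
            indices.push(positions.length / 3);
            positions.push(vertex[0], vertex[1], vertex[2]);
            normals.push(currentNormal[0], currentNormal[1], currentNormal[2]);
        }
    });

    return { positions: positions, normals: normals, indices: indices };
}

const sampleStl = [
    "solid sample",
    "  facet normal 0 0 1",
    "    outer loop",
    "      vertex 0 0 0",
    "      vertex 1 0 0",
    "      vertex 0 1 0",
    "    endloop",
    "  endfacet",
    "endsolid sample"
].join("\n");
const sampleBuffers = stlAsciiToBuffers(sampleStl);
```

Skipping vertex sharing keeps the sketch short at the cost of larger buffers; a real converter might deduplicate identical vertices.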

Step 3

Get Babylon.js running in a WebView

There were a few different ways to approach this. Rather than hosting the HTML on Azure, which would make the app depend on an internet connection, I decided to host the HTML within the app package and inject the mesh into the scene once it was converted.

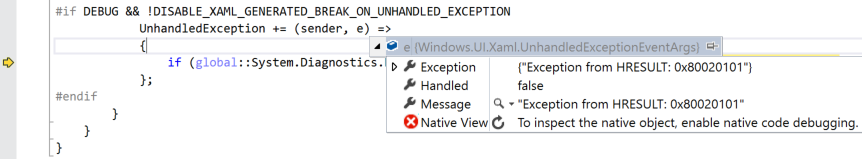

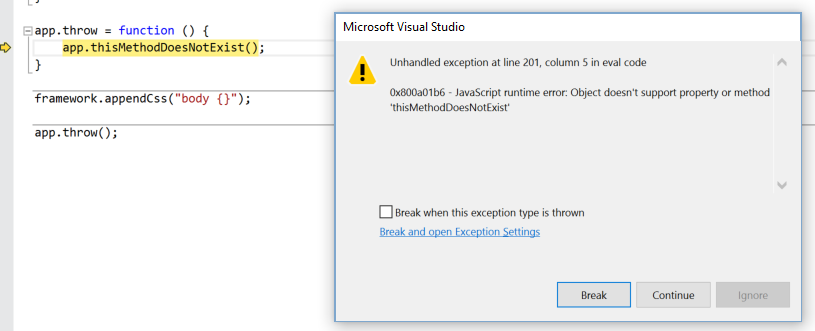

As it turns out, there was a bug in my HybridWebApp Framework that broke loading of local HTML, so after a slight detour fixing that bug I was back on track.

The various components of the app ended up looking like this:

MainPage.xaml

    <Page
        x:Class="BabylonJs.WebView.MainPage"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:local="using:BabylonJs.WebView"
        xmlns:toolkit="using:HybridWebApp.Toolkit.Controls"
        xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
        xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
        mc:Ignorable="d">

        <Grid Background="{ThemeResource ApplicationPageBackgroundThemeBrush}">
            <ProgressBar Margin="0,10,0,0"
                         IsIndeterminate="True"
                         Loaded="ProgressBar_Loaded"
                         Visibility="Collapsed"
                         VerticalAlignment="Top"
                         HorizontalAlignment="Stretch" />

            <toolkit:HybridWebView x:Name="WebHost"
                                   Margin="0,20,0,0"
                                   WebUri="ms-appx-web:///www/app.html"
                                   Ready="WebHost_Ready"
                                   MessageReceived="WebHost_MessageReceived"
                                   EnableLoadingOverlay="False"
                                   NavigateOnLoad="False" />
        </Grid>

        <Page.BottomAppBar>
            <CommandBar x:Name="CommandBar">
                <CommandBar.PrimaryCommands>
                    <AppBarButton Icon="OpenFile" Click="ImportStlFile_Click" Label="Import" />
                    <AppBarButton x:Name="SaveButton" IsEnabled="False" Icon="Save" Click="SaveConverted_Click" Label="Save" />
                </CommandBar.PrimaryCommands>
            </CommandBar>
        </Page.BottomAppBar>
    </Page>

app.html

    <!DOCTYPE html>
    <html lang="en" xmlns="http://www.w3.org/1999/xhtml">
    <head>
        <meta charset="utf-8" />
        <style>
            html, body {
                overflow: hidden;
                width: 100%;
                height: 100%;
                margin: 0;
                padding: 0;
            }

            canvas {
                width: 100%;
                height: 100%;
                touch-action: none;
            }
        </style>
        <script src="ms-appx-web:///www/js/cannon.js"></script>
        <script src="ms-appx-web:///www/js/Oimo.js"></script>
        <script src="ms-appx-web:///www/js/babylon.2.0.js"></script>
        <script src="ms-appx-web:///www/js/app.js"></script>
    </head>
    <body>
        <canvas id="canvas"></canvas>
    </body>
    </html>

app.js

    /// <reference path="babylon.2.0.debug.js" />

    // Single namespace object; the host app calls into it through the web view.
    var app = {};
    app._engine = null;
    app._scene = null;
    app._canvas = null;
    app._transientContents = []; // meshes added by imports

    app.initScene = function (canvasId) {
        var canvas = document.getElementById(canvasId);
        var engine = new BABYLON.Engine(canvas, true);
        var scene = new BABYLON.Scene(engine);

        var dirLight = new BABYLON.DirectionalLight('dirLight', new BABYLON.Vector3(0, 1, 0), scene);
        dirLight.diffuse = new BABYLON.Color3(0.1, 0.2, 0.3);

        var arcRotateCamera = new BABYLON.ArcRotateCamera("arcCamera", 1, 0.8, 10, BABYLON.Vector3.Zero(), scene);
        arcRotateCamera.target = new BABYLON.Vector3(0, 10, 0);
        scene.activeCamera = arcRotateCamera;
        scene.activeCamera.attachControl(canvas, true);

        var debugLayer = new BABYLON.DebugLayer(scene);
        debugLayer.show(true);

        this._canvas = canvas;
        this._engine = engine;
        this._scene = scene;

        // Slowly orbit the camera while rendering each frame.
        engine.runRenderLoop(function () {
            arcRotateCamera.alpha += 0.001;
            scene.render();
        });
    };

C# code to init Babylon.js (in MainPage.xaml.cs)

    private void WebHost_Ready(object sender, EventArgs e)
    {
        // Once the root page has loaded successfully, initialise the Babylon.js scene.
        WebHost.WebRoute.Map("/", async (uri, success, errorCode) =>
        {
            if (success)
            {
                await WebHost.Interpreter.EvalAsync("app.initScene('canvas');");
            }
        });
    }

As it turns out, Babylon.js (and thus WebGL) works like any other webpage when hosted inside the WebView. No magic required.

Step 4

Glue it all together!

Now that I had the pieces in place, it was a simple matter of writing some JavaScript for scene management (clear the old mesh, load the new mesh, position the camera) and proxying the converted .babylon file into the WebView to be rendered by Babylon.js.
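As a rough idea of what the "clear the old mesh" step might look like, here is a minimal sketch. The `clearTransientContents` name is hypothetical; it only assumes that every tracked item exposes a `dispose()` method, as Babylon.js meshes do. The stand-in objects at the bottom exist only so the snippet can run outside a browser.

```javascript
// Hypothetical helper: disposes every mesh tracked from a previous import
// and leaves the tracking array empty, ready for the next model.
function clearTransientContents(transientContents) {
    while (transientContents.length > 0) {
        const mesh = transientContents.pop();
        mesh.dispose(); // on a Babylon.js mesh this removes it from its scene
    }
}

// Stand-in objects so the sketch runs without Babylon.js loaded.
const disposedNames = [];
const fakeMeshes = [
    { name: "first", dispose: function () { disposedNames.push(this.name); } },
    { name: "second", dispose: function () { disposedNames.push(this.name); } }
];
clearTransientContents(fakeMeshes);
```

In the page itself, something like `clearTransientContents(app._transientContents)` could run just before a new model is imported.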

The StlConverter is fairly simple to use: it takes a Stream as its constructor parameter and exposes a single ToJsonAsync method that performs the conversion:

    // file is the StorageFile picked by the user
    var s = await file.OpenReadAsync();
    var converter = new StlConverter(s.AsStream());
    var result = await converter.ToJsonAsync();

Sending the result from the host app to the web page is also simple:

await WebHost.Interpreter.EvalAsync(string.Format("app.loadBabylonModel('{0}');", result));
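One thing to watch with this approach is that the JSON is pasted between single quotes, so a mesh name containing an apostrophe or a backslash would break the generated script. The sketch below shows the escaping idea in JavaScript; the host app is C#, so a comparable string encoder would be needed on that side, and the `buildLoadScript` name is hypothetical.

```javascript
// Hypothetical helper: builds the script text that would be handed to the web view.
// JSON.stringify on a string produces a double-quoted literal with quotes,
// backslashes and line breaks escaped, so the payload survives the round trip.
function buildLoadScript(json) {
    return "app.loadBabylonModel(" + JSON.stringify(json) + ");";
}

// A payload that would break naive single-quote wrapping.
const trickyPayload = '{"name":"it\'s a model"}';
const loadScript = buildLoadScript(trickyPayload);
```

The receiving function still gets a plain string argument, so `app.loadBabylonModel` would not need to change.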

The called function looks like this and does the actual loading of the mesh:

    app.loadBabylonModel = function (json) {
        // Babylon.js treats a scene file name starting with "data:" as inline content.
        var dataUri = "data:" + json;
        var scene = this._scene;
        var canvas = this._canvas;
        var transientContents = this._transientContents;

        BABYLON.SceneLoader.ImportMesh("", "/", dataUri, scene, function (meshArray) {
            var mesh = meshArray[0];

            // Reset the transform so the model sits at the origin.
            mesh.position = new BABYLON.Vector3(0, 0, 0);
            mesh.rotation = new BABYLON.Vector3(0, 0, 0);
            mesh.scaling = new BABYLON.Vector3(1, 1, 1);

            // Pull the camera back far enough to see the whole model.
            var boundingInfo = mesh.getBoundingInfo();
            var centerY = boundingInfo.boundingBox.center.y;
            scene.activeCamera.setPosition(new BABYLON.Vector3(0, centerY, boundingInfo.boundingSphere.radius * 4));
            scene.activeCamera.target = new BABYLON.Vector3(0, centerY, 0);

            // Put a standard material onto the mesh.
            var material = new BABYLON.StandardMaterial("", scene);
            material.emissiveColor = new BABYLON.Color3(105 / 255, 113 / 255, 121 / 255);
            material.specularColor = new BABYLON.Color3(1.0, 0.2, 0.7);
            material.backFaceCulling = false;
            mesh.material = material;

            framework.scriptNotify(JSON.stringify({ type: 'log', payload: 'mesh imported, array length was ' + meshArray.length }));

            // Track the mesh so it can be cleared before the next import.
            transientContents.push(mesh);
        });
    };
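The camera placement in that callback boils down to a little arithmetic: look at the vertical centre of the model and stand four bounding-sphere radii away along the Z axis. Pulled out as a plain function it might look like the sketch below; `frameCamera` is a hypothetical name, and plain objects stand in for `BABYLON.Vector3` so the snippet runs on its own.

```javascript
// Hypothetical helper: computes where the camera should sit and look,
// given the model's bounding box centre (Y only) and bounding sphere radius.
function frameCamera(centerY, radius) {
    return {
        position: { x: 0, y: centerY, z: radius * 4 }, // four radii back along Z
        target: { x: 0, y: centerY, z: 0 }             // vertical centre of the model
    };
}

// A model centred 5 units up with a radius of 2 puts the camera 8 units away.
const framing = frameCamera(5, 2);
```

The multiplier of 4 comes straight from the code above; a smaller value would fill more of the view with the model.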

Voila, we now have a loaded mesh in the scene:

At the time of writing there are some issues with the normals that cause inconsistent lighting, but I’m otherwise quite happy with how this little project has panned out. In future I plan to support additional file formats (AMF, OBJ) and make better use of communication from the web page back to the host app.
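One way to investigate normals like these, offered purely as an assumption and not as the known cause or fix, is to recompute each facet normal from its three vertices and compare it with the value stored in the file. STL facets are conventionally wound counter-clockwise when viewed from outside, so the cross product of two edges gives the outward direction. The `computeFacetNormal` name is hypothetical.

```javascript
// Hypothetical helper: unit normal of triangle (a, b, c) using the right-hand
// rule; each argument is an [x, y, z] array.
function computeFacetNormal(a, b, c) {
    const u = [b[0] - a[0], b[1] - a[1], b[2] - a[2]];
    const v = [c[0] - a[0], c[1] - a[1], c[2] - a[2]];
    const n = [
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0]
    ];
    const length = Math.hypot(n[0], n[1], n[2]);
    if (length === 0) {
        return [0, 0, 0]; // degenerate triangle, no meaningful normal
    }
    return n.map(function (x) { return x / length; });
}

// A counter-clockwise triangle in the XY plane faces +Z.
const upNormal = computeFacetNormal([0, 0, 0], [1, 0, 0], [0, 1, 0]);
```

If the recomputed and stored normals disagree for many facets, the winding order or the stored normals would be the first thing to check.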

The full source code to accompany the article is available on GitHub: https://github.com/craigomatic/BabylonJS-Framework